Self explainable graph convolutional recurrent network for spatio-temporal forecasting

Javier García-Sigüenza, Manuel Curado, Faraón Llorens-Largo & José F. Vicent

Machine Learning

Volume 114, article 2 (2025)

doi: 10.1007/s10994-024-06725-6

Published 14 January 2025

https://link.springer.com/article/10.1007/s10994-024-06725-6

Abstract

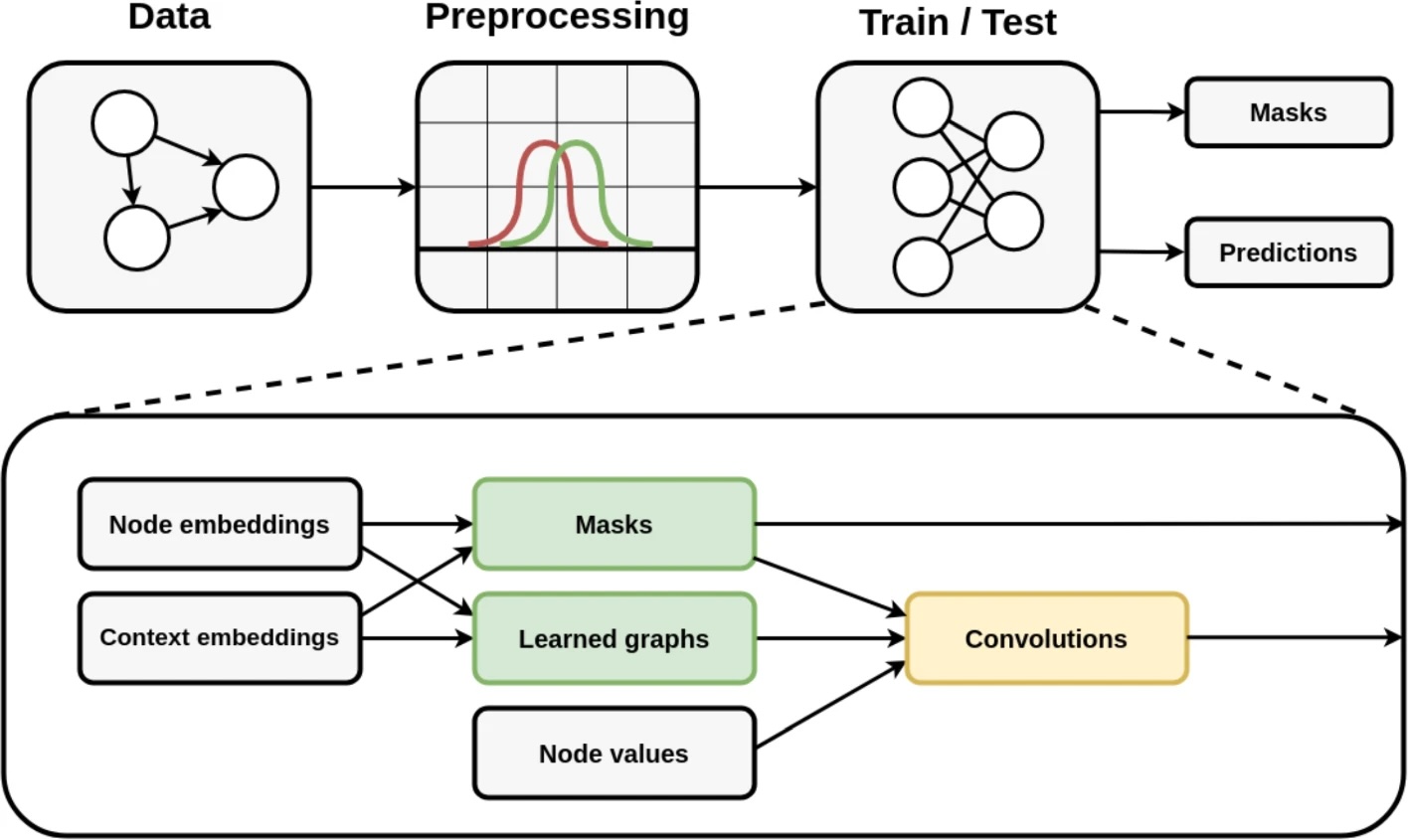

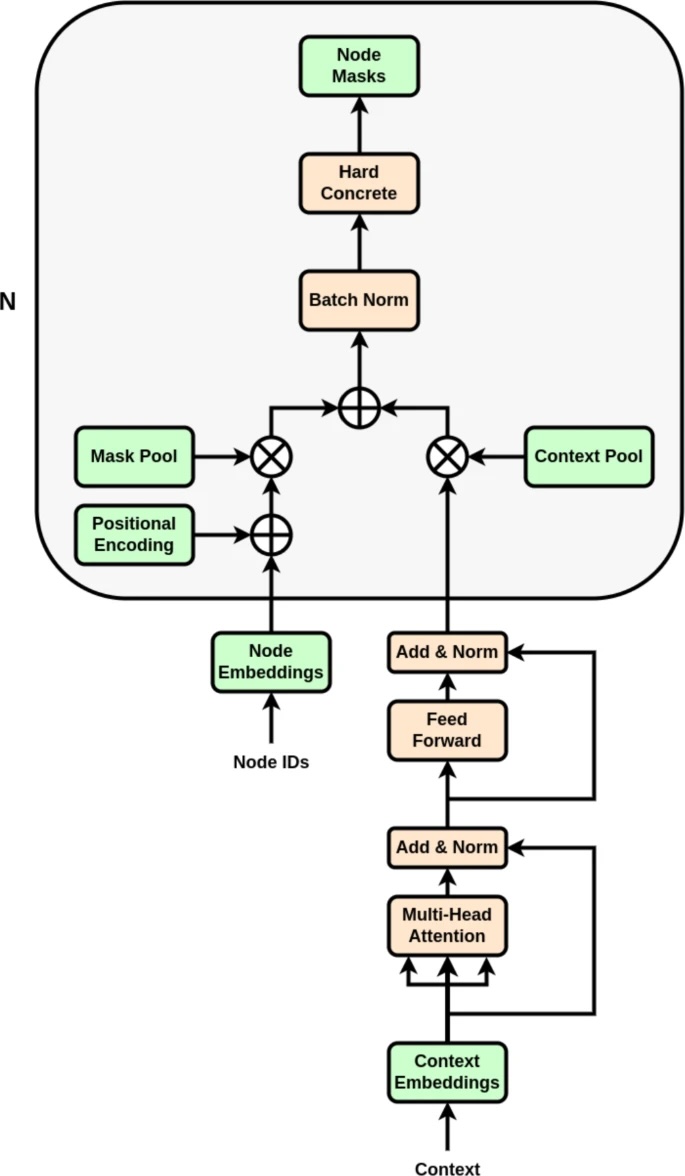

Artificial intelligence (AI) is transforming industries and decision-making processes, but concerns about its transparency and fairness have grown. Explainable artificial intelligence (XAI) is crucial to addressing these concerns: it brings transparency to AI decision making, mitigates the effect of biases and fosters trust. However, applying XAI to problems with spatio-temporal components is challenging, because few options exist and those that do penalize performance in exchange for the explainability they provide. This paper proposes the self explainable graph convolutional recurrent network (SEGCRN), a model that integrates explainability into the architecture itself. Its aim is to improve the model's ability to infer the relationships and dependencies between nodes, offering an alternative to explainability techniques that are applied as a separate second layer. On different datasets, the proposed model reduces the amount of information needed to make a prediction and also reduces the impact that applying an explainability technique has on the prediction, so that less information is used without loss of accuracy. SEGCRN is therefore proposed as a gray box whose behavior is easier to understand than that of black-box models. The model has been validated on traffic data, which combines spatial and temporal components, with promising results.
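The abstract does not specify how SEGCRN limits the information used for each prediction. The following is a purely illustrative sketch of one common way a graph model can expose which neighbours it relies on: a learnable per-edge mask inside a graph convolution, followed by a simple recurrent update. It is not the authors' mechanism. The names `masked_graph_conv`, `recurrent_step`, `mask_logits`, `threshold`, `w` and `u` are hypothetical, and the scalar weights stand in for the learned weight matrices that a real model would use.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def masked_graph_conv(adj: Matrix, mask_logits: Matrix, x: List[float],
                      threshold: float = 0.5) -> Tuple[List[float], int]:
    """One propagation step h_i = sum_j A_ij * m_ij * x_j (hypothetical sketch).

    m_ij = sigmoid(mask_logits[i][j]); edges whose mask falls below
    `threshold` are pruned, so neighbour j contributes nothing to node i.
    Returns the aggregated features and the number of edges actually used.
    """
    n = len(adj)
    h = [0.0] * n
    kept = 0
    for i in range(n):
        for j in range(n):
            if adj[i][j] == 0.0:
                continue  # no edge in the input graph
            m = sigmoid(mask_logits[i][j])
            if m < threshold:
                continue  # edge pruned by the learned mask
            kept += 1
            h[i] += adj[i][j] * m * x[j]
    return h, kept


def recurrent_step(adj: Matrix, mask_logits: Matrix, x_t: List[float],
                   h_prev: List[float], w: float = 1.0,
                   u: float = 0.5) -> Tuple[List[float], int]:
    """Simple tanh recurrence over the masked convolution (assumed form)."""
    conv, kept = masked_graph_conv(adj, mask_logits, x_t)
    h = [math.tanh(w * c + u * hp) for c, hp in zip(conv, h_prev)]
    return h, kept
```

Counting the kept edges gives a simple measure of how much neighbour information a prediction depends on. In a trained model the mask logits would be learned, for instance together with a sparsity penalty (an assumption here), so that fewer edges are used while accuracy is preserved.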

Keywords: Graph neural networks, Deep learning, Data analysis, Explainability, Spatio-temporal forecasting